Funding

Our research is made possible by funding from a broad range of grants and industry collaborations. We are deeply thankful to all the entities listed below.

Active Grants

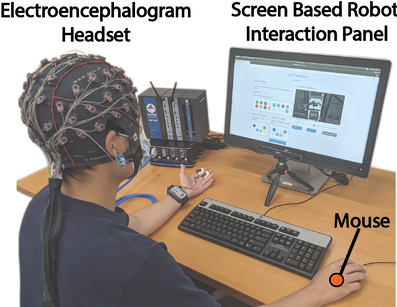

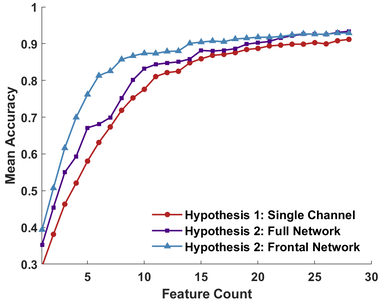

- "NCS-FO: Understanding the computations the brain performs during choice," supported by NSF, 09/01/2023 - 08/31/2026

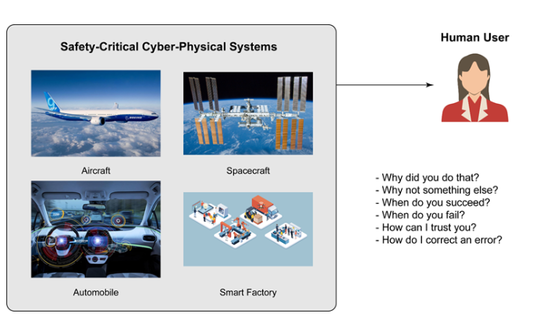

- "Metrics and Models for Real-Time Inference and Prediction of Trust in Human-autonomy Teaming," supported by AFOSR, 11/25/2022 - 11/24/2025

- "Unmanned Aerial Vehicle Swarms for Large-Scale, Real-Time, and Intelligent Disaster Responses," supported by Sony Corporation, 06/17/2021 - 09/30/2023

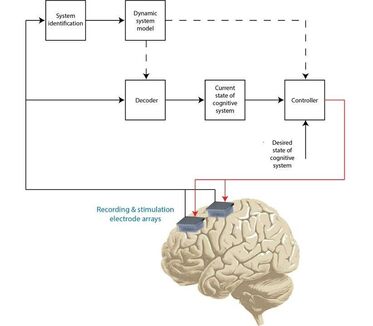

- "NCS-FO: Identification and Control of Neural Cognitive Systems," supported by NSF, 10/01/2020 - 09/30/2024

- "AI Institute: Next Generation Food Systems," supported by USDA/NSF, 09/01/2020 - 08/31/2025

- "Habitats Optimized for Missions of Exploration (HOME)," supported by NASA, 09/01/2019 - 08/31/2024